When I first started out in design, my only motivation was to contribute something, to ‘save the world’. For my final year project I forewent personal comfort to platform an issue that affects so many of my community on a deep personal level.

Mosaic Voice is a consumer-viable Electroglottograph (EGG) designed to help transgender people (specifically trans women) perform voice therapy training.

Comprising two parts, a wearable EGG and a supporting app, Mosaic was conceptualised as an extensible system, providing the basic software, while region and use-case specific needs could be met via a library of plug-ins.

This was based on first-hand experience and extensive research, which indicated that any solution would be region-specific and require outside experience for each subsequent location / context it was to be applied to.

Voice Therapy Training

Voice therapy training is a sensitive and highly personal process which poses significant practical difficulty and emotional strain for trans people with vocal dysphoria. Resources are scarce, and the stigma around adults performing vocal training makes embarrassment pervasive.

Democratised design can facilitate this process by creating assistive tools and means to alleviate the emotional difficulties incurred.

Voice therapy training (VTT) is a broad term for practices and training regimes designed to modify how the voice sounds. It can be utilised by singers and actors, but also by people recovering from injury and by trans people, in particular trans women such as myself, whose voices are not affected by hormone replacement therapy.

Issues with Specialist Help

Specialist tutors & therapists exist to help with this process, offering guidance, techniques, coaching and so on, usually over a long period of time. I personally make use of the excellent service by Christella Antoni, who takes a holistic approach to sessions, integrating social aspects and involving the client in the process. It should be noted that I am not affiliated with Christella Voice; my opinions are my own and genuine.

This works well to tackle the individualised nature of voice and offer professional guidance. The issues arise from the fact that so few people actually perform this service. People travel from across the country to attend sessions because there’s nowhere closer, with the cost of travel tickets exceeding that of the sessions. And even then, one person can only see so many clients.

There’s also the issue that many trans people are, for lack of a better term, broke.

Trans people are massively discriminated against in work, promotions, housing and healthcare, among other things. This leads to a significant majority living below the poverty line, which makes access to services like Christella’s difficult for some to manage. On top of that, you must consider the vicious cycle: one’s ability to “pass” is largely dependent on voice, which has a compounding effect on discrimination faced, which leads to more poverty, and so on.

This leads to many of us (if not most at some point) turning to the idea of doing it yourself…

Issues with DIY

Many people turn to resources on the internet such as YouTube videos, the few training apps which exist, and the occasional Reddit post which is cited by everyone you know and threatens to disappear one day. Also you have to tolerate looking at Reddit.

The issue boils down to the fact that, with say a YouTube video, you are seeing a “patient’s” personal reflection on what they deem to be the most memorable aspects of a highly personal journey, as opposed to structured content.

Even when structured content is available, it is often nullified by the lack of tutoring context and practice structure. There is an issue of “hearing yourself”, that is, gauging correctly where your progress stands, what you should still be working on, and knowing when to celebrate achievement.

Three Target Issues

This led me to define three topic areas to explore as the basis for my dissertation:

- Visualisation: concerning the issue of self-reflection, issues around hearing yourself and your progress

- Tool: A set of techniques and resources, not to supplant specialist support, but to aid in self-practice

- Goals: Looking at the auto-therapeutic aspect of VTT, aiming to address the physiological distress and discomfort as well as help people define obtainable targets.

Electroglottographs and Self-‘Visualisation’

A more industrial system by Laryngograph. I include this image to highlight the connection with the ‘desktop’ version; that these are medical devices first.

The Laryngograph site tells a story about how old / established this device is.

The interface of the desktop version of the Laryngograph

One of the most useful physical tools employed by voice therapists is an Electroglottograph, a device which provides in-depth data on the behaviour of the larynx and audio aspects which comprise the voice.

These devices are difficult to come by, large, clunky and expensive, so few people who are not specialists are likely to own one.

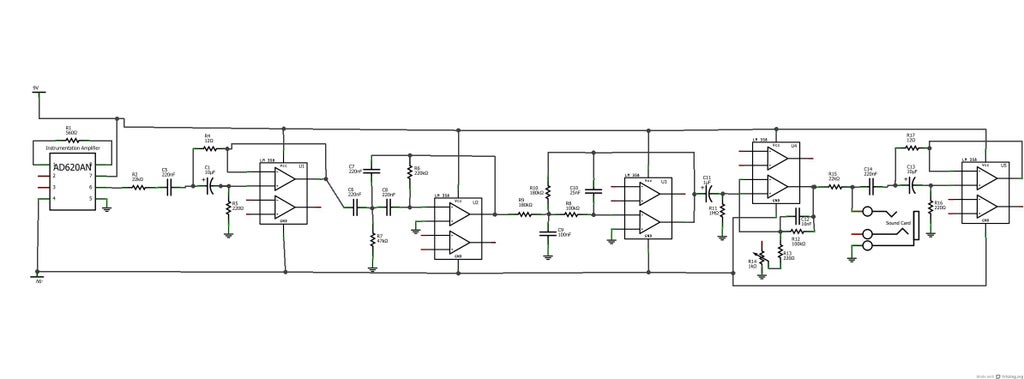

Their function is effectively quite simple: an electrostatic field is created across the contact probes, which are pressed against the client’s neck. The various vibrations and noises interrupt the field across various channels; these interruptions are picked up by the device and processed into usable data by the software.

Rethinking the EGG

A Precedent

Initially, I assumed that designing a new EGG was impossible, so I pushed the idea away. In early December (just as everything was winding down for the winter break), I came across two articles concerning the creation of DIY EGG devices.

I am research and engineering driven in my approach; I was not willing to create a project based on the assumption that a new type of EGG could be made without first seeing something to indicate its validity, and then packaging this in some way into a proof-of-viability / proof-of-concept.

The first resource I came across was this project by Marek Materzok on Hackaday.io which documented their process of making and refining an EGG device from scratch. There was not enough information to replicate the process but it offered a beginning insight into some of the challenges such a device would face (namely noise filtering and the best way to create the oscillation).

This led me to this tutorial on Instructables of all places, DIY EEG (and ECG) Circuit by user Cah6, which gave details and specifications for building an Electroglottograph from simple components. This was all I needed to tell me that it could be done.

I made inroads into building my own version but decided to allocate my time elsewhere given how late on in the project I was.

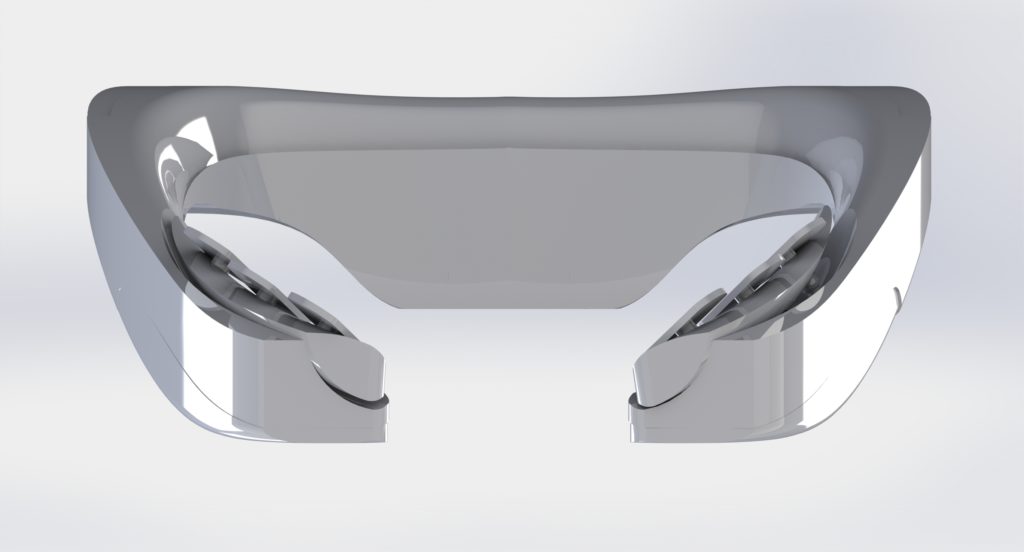

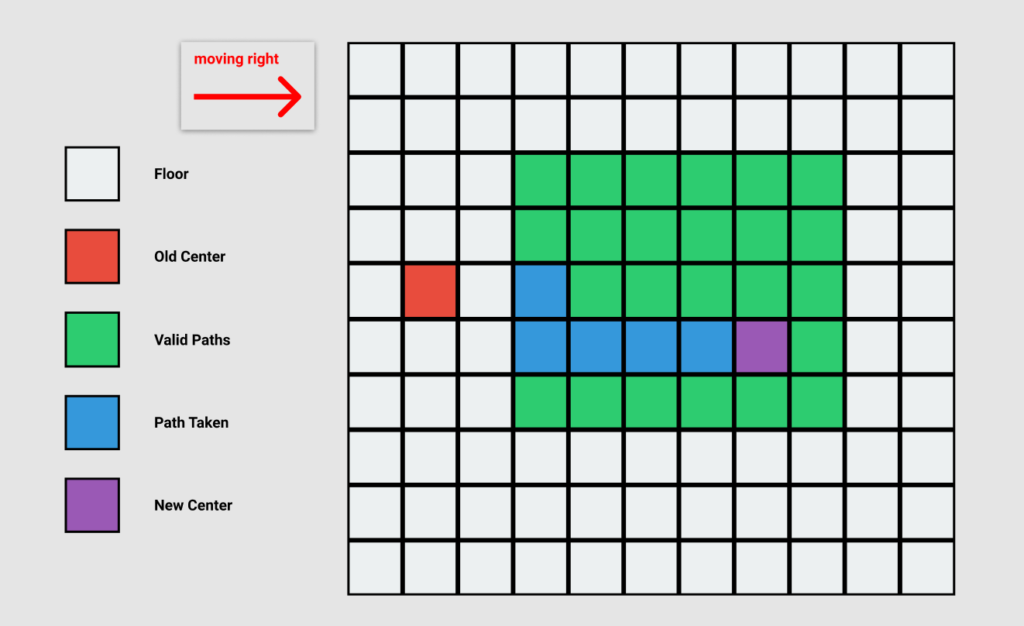

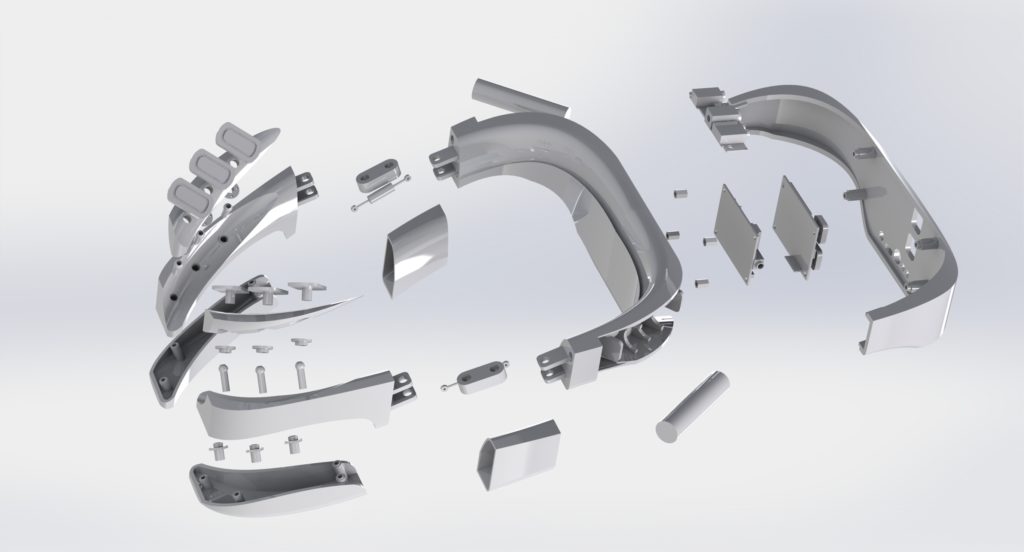

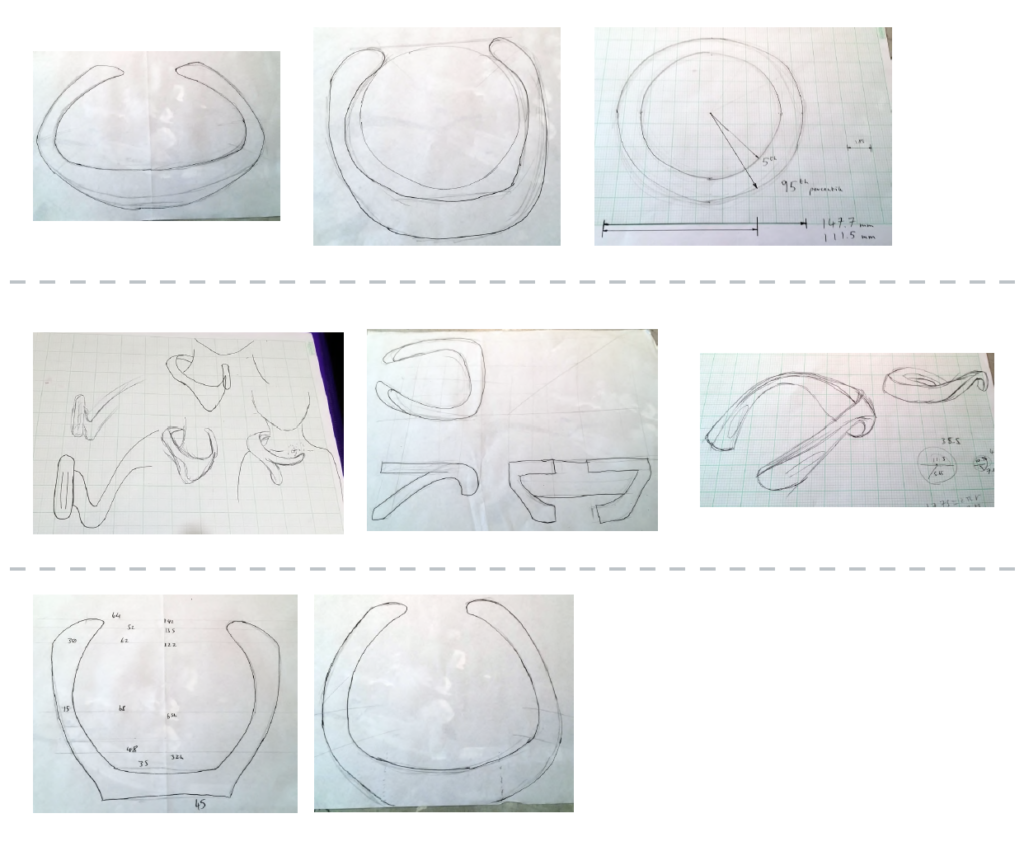

I decided on a wearable typology given the ergonomic difficulty encountered with the strap-on probes. The device would hook round the user’s neck, designed to cradle on the shoulders. The hinge components on each arm flex to adjust for neck sizes while retaining points of tension on the probes.

Most of the circuitry and interface buttons were placed on the back, with the batteries closer to the shoulder blades. This was to achieve weight balance on the arms, but also to ensure that any imbalance would only serve to pull the probes against the neck more, rather than let the device slide off.

Chips on Both Sides

Using Cah6’s article as my template, I found an optimal size for the EGG control chip, which would be placed under another board which would handle interfacing with the ports and network. This second board was designed around the Broadcom BCM2835 controller used on the older Raspberry Pis, given the low cost, versatility and proven record it provided.

Other smaller components such as the WiFi chip were also taken from the Pi series. Cost was a primary motivator with most of the design decisions, given that this device had to be as low cost as possible.

The chassis is made of simple injection moulded nylon and designed to be easy to disassemble, repair, hack, etc. Screws are standard size and not hidden, components are all accessible, and the batteries are lithium-ion AAAs, so they can be swapped out at any time.

Motivation and Repetition

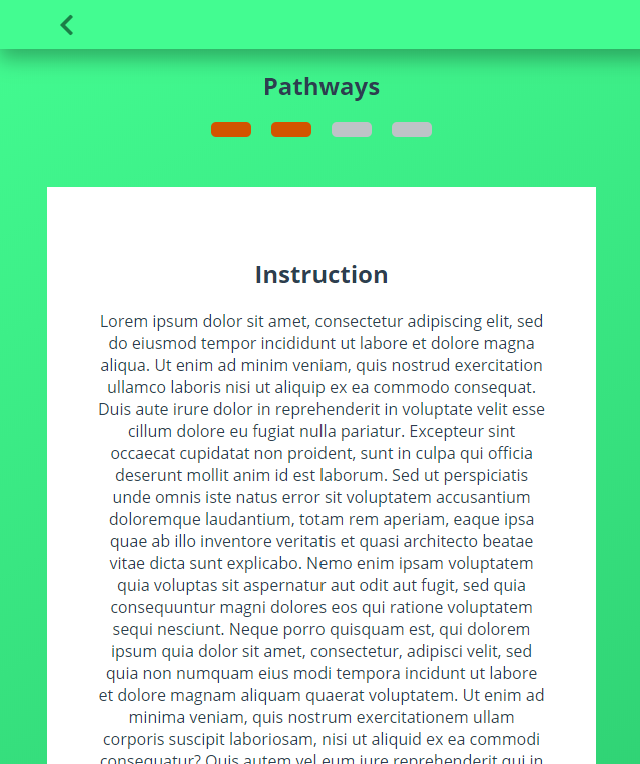

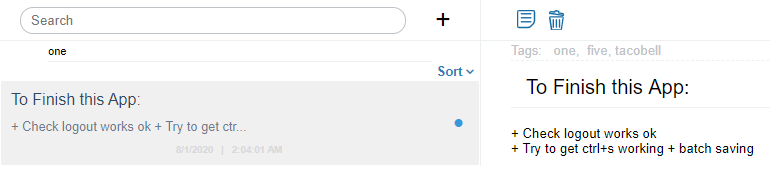

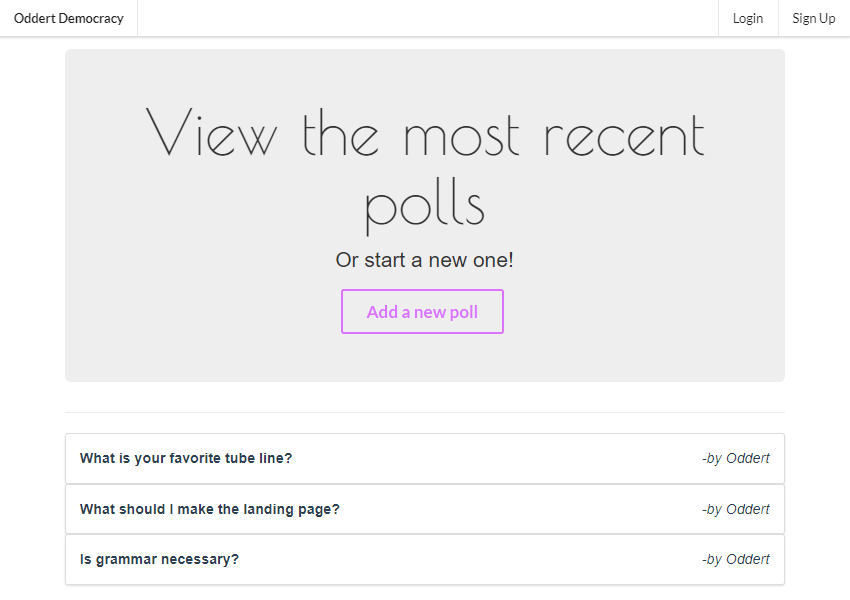

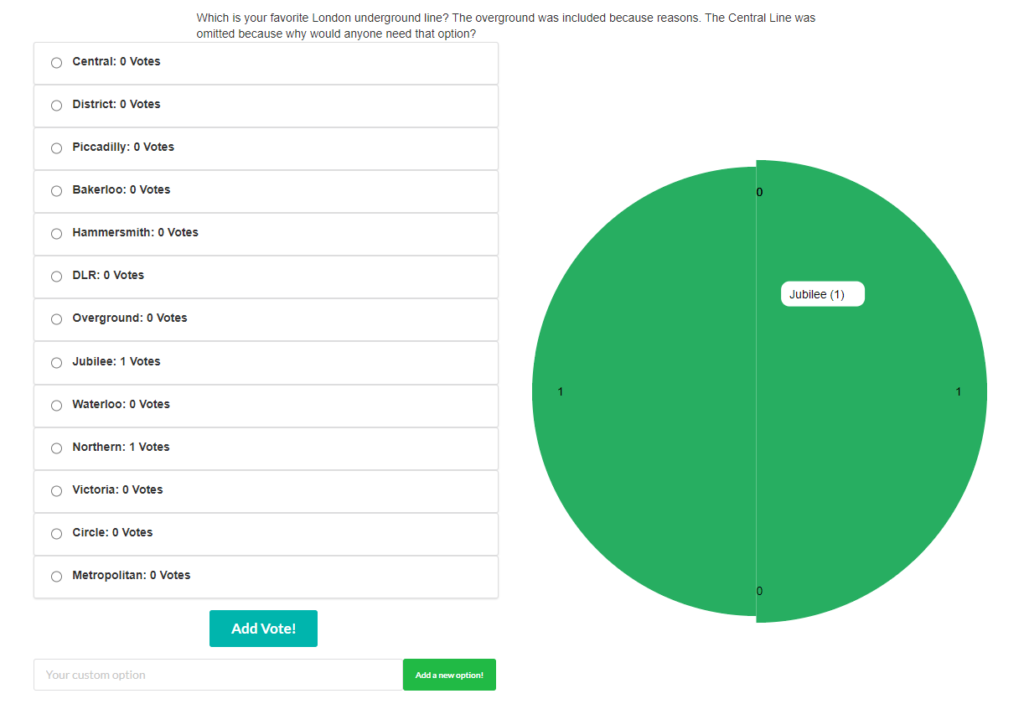

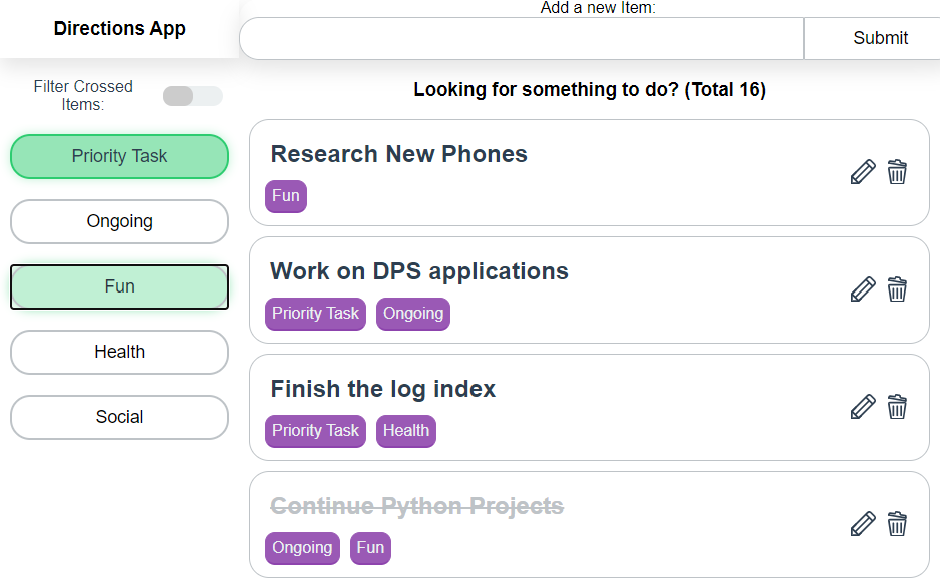

For the software end, I laid out a feature map for a mobile app including:

- A modular daily training system: Inspired by the Enki app, this would show ‘Pathways’ which would utilise the following tools to guide users through speaking exercises.

- A set of quick practice tools: These would show a simple animation or instruction and allow the user, in bursts of 30 seconds or so, to practice some aspect of breathing or warm-up.

- A pitch sample recorder: An area to record and sample the voice over a piece of sample text to view pitch over time.

- A resonance estimator (using neural networks): While the EGG is needed for accurate resonance sampling, this would provide a middle ground for people without financial access. Using a pre-trained convolutional network, an ‘estimation’ of resonance levels could be pooled. This would record the samples in the same area as the pitch sample recorder.

- A continuous listening sampler: Somewhat experimental, this functionality would note samples throughout the day of the user’s voice as they perform their daily activities. This could be used by the user to see how they remember their training in various, uncontrolled environments.

- A voice pattern matcher: Would depend on finding the right region-specific data set. Another convolutional network would match the user’s voice with one that sounded similar in most respects but could be adjusted for vocal features. This could then be used to practice against and set goals for the user to aim for.

- A voice creator (neural networks): Would depend on finding the right region-specific data set. A recursive generator neural network would modify the input voice to be adjusted for vocal features such as softness, tone, pitch, resonance, etc. This would allow the user to, for example, “gender swap” their voice to try it out.

I built the frame for a progressive web app to demo the features which I could implement at the time, and provided dummy data for items that would require live data.
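To give a rough idea of what the pitch sample recorder in the list above involves, here is a minimal sketch of tracking pitch over time using plain autocorrelation. It is not the code from the Mosaic app: the function names, the 75 to 400 Hz search range, the frame sizes and the 0.3 voicing threshold are all assumptions picked for illustration, and a real recorder would need noise handling on top of this.

```python
import math


def estimate_pitch_hz(frame, sample_rate, fmin=75.0, fmax=400.0):
    """Estimate the fundamental frequency of one frame via autocorrelation.

    Returns None when the frame is silent or no clear periodicity is found.
    fmin / fmax are assumed bounds for a speaking voice, not measured values.
    """
    n = len(frame)
    mean = sum(frame) / n
    x = [s - mean for s in frame]          # remove any DC offset
    energy = sum(s * s for s in x)
    if energy == 0.0:
        return None                        # silence

    min_lag = int(sample_rate / fmax)      # shortest period considered
    max_lag = min(int(sample_rate / fmin), n - 1)

    best_lag, best_score = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        # Similarity between the frame and a copy of itself shifted by `lag`
        score = sum(x[i] * x[i + lag] for i in range(n - lag)) / energy
        if score > best_score:
            best_lag, best_score = lag, score

    # 0.3 is an arbitrary "is this voiced?" threshold for the sketch
    if best_lag is None or best_score < 0.3:
        return None
    return sample_rate / best_lag


def pitch_track(samples, sample_rate, frame_ms=40, hop_ms=20):
    """Return a list of (time_in_seconds, pitch_hz_or_None) pairs."""
    frame_len = int(sample_rate * frame_ms / 1000)
    hop = int(sample_rate * hop_ms / 1000)
    track = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start:start + frame_len]
        track.append((start / sample_rate, estimate_pitch_hz(frame, sample_rate)))
    return track


# Usage: one second of a synthetic 200 Hz tone standing in for a recording
rate = 8000
tone = [math.sin(2 * math.pi * 200 * i / rate) for i in range(rate)]
track = pitch_track(tone, rate)
```

The resulting list of time and pitch pairs is what would be plotted as "pitch over time" against the sample text.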

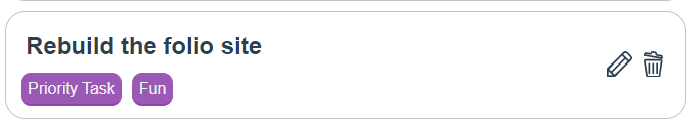

Neumorphism

At the time (late 2019), there was speculation rising about the concept of “Neumorphism”, coined as a play on “skeuomorphism” by Devanta Ebison. I hadn’t made up my mind about the style but I saw potential for a textured, soft and welcoming interface which would be great to try for the app.

The result was a pleasing, warm aesthetic. I especially liked items such as the progress indicators, which felt like little gems that you wanted to have, with empty ‘slots’ for the unfilled sections.

I’d like to write extended thoughts about the topic at some time, but suffice to say, while I liked the unique aesthetic of this app, it only worked due to the colour contrasts and would have faltered slightly if I had followed the pattern where a ‘raised’ section is the same colour as the background.

This is one of the critical flaws with neumorphism (and skeuomorphism to a lesser degree): its smooth transitions and drop-shadow facilitated layer / element separation are often incredibly low contrast. This is a problem on displays with lower contrast settings or fewer colour bands, for items viewed at any sort of distance, and of course, for accessibility.

The advantage of more Material-UI-esque drop shadow element-separation is that you can still use other features such as subtle borders to add definition and get round this issue. Even skeuomorphism (which, for the record, I am not a fan of) relies on heavy gradients and colour mixing to get its textured effect.
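The low-contrast concern can be put into numbers with the WCAG contrast ratio formula. The sketch below is only an illustration: the two colours are values I have picked as a typical soft background and shadow pairing, not colours taken from the Mosaic app, and the function names are my own.

```python
def _linearise(channel_8bit):
    """Convert one 0-255 sRGB channel to linear light (WCAG 2.x definition)."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb):
    """Relative luminance of an (r, g, b) tuple, from 0.0 (black) to 1.0 (white)."""
    r, g, b = (_linearise(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(rgb_a, rgb_b):
    """WCAG contrast ratio between two colours, from 1.0 up to 21.0."""
    la, lb = relative_luminance(rgb_a), relative_luminance(rgb_b)
    lighter, darker = max(la, lb), min(la, lb)
    return (lighter + 0.05) / (darker + 0.05)


# Illustrative (assumed) colours: a pale background and the soft shadow
# that separates a 'raised' element of the same colour from it.
background = (224, 229, 236)
shadow = (163, 177, 198)
ratio = contrast_ratio(background, shadow)
```

WCAG asks for at least 3:1 between a user interface component and its surroundings, so a shadow-only edge like this one falls short, whereas a subtle darker border can be checked and tuned with the same function.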

Reflections

The project concluded successfully: I got an A and the presentation was received well. It’s one of my favourite pieces of work and I’m proud to have it as my final year project.

But I still have hang-ups.

This was, for all intents and purposes, The Big One™, the final year project, and more than that, it was something I so closely believed in. It wasn’t enough for it to be good or even great; it had to be a masterpiece.

I was afraid my work couldn’t speak for itself

I kept diversifying the system while not building on what was there. It’s true that the solution should take the form of an integrated system, but I remember over and over again not being satisfied with what I had, constantly striving for something to truly step up to the next level.

In reality, I was having a crisis of design. I saw myself perched between two worlds, one of Product Design, and the other of Engineering / Code. I told myself over and over that there was no divide, that we all have ranges of skillsets, but I couldn’t shake the feeling that I was a jack of all trades and a master of none.

So I kept adding ‘stuff’, imagining the basics of a complex system and then trying to work back from there (the engineering approach when you actually know what that system is). I spent a month on a little breathing exerciser device before stepping back to ask “what the * am I doing? What is this?”

When I shifted hard into the EGG route, I was doing so over the break, working tirelessly while others were relaxing, just to catch up.

I used my specialisation as justification for not re-evaluating

Perhaps what is worse is that the warning signs were there; clear indicators that I should clear my head, define one or two things that the product had to do, and just work on those.

I let myself believe (not incorrectly) that I was uniquely positioned to pull off engineered solutions completely unlike anything my colleagues could do, due to my specific code-based skills. This is a mistake that would unfortunately not fully reveal itself until Tailored Nutrition.

In doing so, I chased these multiple vague threads instead of simply doubling down on the core that ended up being the final outcome. I ended up working twice as hard as some but still “only” producing what I could have under other circumstances.

I assumed that I could just grind to finish

Perhaps the most egregious mistake I made whilst all this was going on was the assumption alluded to above, that I would miraculously pull off the feat at the last minute. As I said, I did do that, but for an outcome which could have happened regardless.

I made a habit of being in the studio from 10:00 until 23:00, calculating when would be the most ‘efficient’ time to drink caffeinated drinks, pushing myself beyond physical limits. I could have halved that time and used the remainder to rest, research and regain my humanity, but instead I saw time as a commodity to be collected and hoarded as much as possible.

We all have pressing deadlines from time to time. But if you begin to see time itself as an enemy, it’s overdue that you stop and reevaluate.